Connecting a custom web-service to the machine learning functionality

Introduction

Creatio 7.16.2 and up supports connecting custom web-services to the machine learning functionality.

You can implement typical machine learning problems (classification, scoring, numerical regression) or other similar problems (for example, customer churn forecast) using a custom web-service. This article covers the procedure for connecting a custom web-service implementation of a prediction model to Creatio.

The main principles of the machine learning service operation are covered in the “Machine learning service” article.

The general procedure of connecting a custom web-service to the machine learning service is as follows:

- Create a machine learning web-service engine.

- Expand the problem type list of the machine learning service.

- Implement a machine learning model.

Create a machine learning web-service engine

A custom web-service must implement a service contract for model training and making forecasts based on an existing prediction model. You can find a sample Swagger service contract of the Creatio machine learning service at:

https://demo-ml.bpmonline.com/swagger/index.html#/MLService

Required methods:

- /session/start – starting a model training session.

- /data/upload – uploading data for an active training session.

- /session/info/get – getting the status of the training session.

- <training start custom method> – a user-defined method to be called by Creatio when the data upload is over. The model training process must not be terminated until the execution of the method is complete. A training session may last for an indefinite period (minutes or even hours). When the training is over, the /session/info/get method will return the training session status: either Done or Error depending on the result. Additionally, if the model is trained successfully, the method will return a model instance summary (ModelSummary): metrics type, metrics value, instance ID, and other data.

- <prediction custom method> – a method with an arbitrary signature that makes predictions based on a trained prediction model referenced by its ID.
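The interplay between the training start method and /session/info/get can be sketched as a small in-memory session registry. This is only an illustration, not part of Creatio or of the sample service: the TrainingSessionRegistry class, the SketchSessionState enum, and the Created and Training states are assumptions made for the sketch (this article names only the Done and Error statuses).

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical session states; only Done and Error are named in this article.
public enum SketchSessionState { Created, Training, Done, Error }

// Hypothetical in-memory registry of training sessions (not a Creatio class).
public class TrainingSessionRegistry
{
    private readonly ConcurrentDictionary<Guid, SketchSessionState> _states =
        new ConcurrentDictionary<Guid, SketchSessionState>();

    // Counterpart of /session/start: registers a session and returns its ID.
    public Guid StartSession()
    {
        Guid sessionId = Guid.NewGuid();
        _states[sessionId] = SketchSessionState.Created;
        return sessionId;
    }

    // Counterpart of the training start method: schedules the work on a
    // background task so that the HTTP handler can return immediately.
    public Task BeginTraining(Guid sessionId, Action train)
    {
        _states[sessionId] = SketchSessionState.Training;
        return Task.Run(() =>
        {
            try
            {
                train();
                _states[sessionId] = SketchSessionState.Done;
            }
            catch (Exception)
            {
                // Any failure during training is reported as the Error status.
                _states[sessionId] = SketchSessionState.Error;
            }
        });
    }

    // Counterpart of /session/info/get: reports the current state.
    // Throws KeyNotFoundException for an unknown session ID.
    public SketchSessionState GetState(Guid sessionId)
    {
        return _states[sessionId];
    }
}
```

A controller built on such a registry would call StartSession from /session/start, BeginTraining from the training start method, and GetState from /session/info/get, so that Creatio can poll the status while training is still running.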

Web-service development with the Microsoft Visual Studio IDE is covered in the “Developing the configuration server code in the user project” article.

Expanding the problem type list of the machine learning service

To expand the problem type list of the Creatio machine learning service, add a new record to the MLProblemType lookup. You must specify the following parameters:

- Service endpoint Url – the URL endpoint for the online machine learning service.

- Training endpoint – the endpoint for the training start method.

- Prediction endpoint – the endpoint for the prediction method.

You will need the ID of the new problem type record to proceed. Look up the ID in the [dbo.MLProblemType] DB table.

Implementing a machine learning model

To configure and display a machine learning model, you may need to extend the MLModelPage mini-page schema.

Implementing IMLPredictor

Implement the Predict method. The method accepts data exported from the system by object (formatted as Dictionary<string, object>, where the key is the field name and the value is the field value) and returns the prediction value. The method may use a proxy class that implements the IMLServiceProxy interface to facilitate web-service calls.

Implementing IMLEntityPredictor

Initialize the ProblemTypeId property with the ID of the new problem type record created in the MLProblemType lookup. Additionally, implement the following methods:

- SaveEntityPredictedValues – the method retrieves the prediction value and saves it for the system entity, for which the prediction process is run. If the returned value is of the double type or is similar to classification results, you can use the methods provided in the PredictionSaver auxiliary class.

- SavePrediction (optional) – the method saves the prediction value with a reference to the trained model instance and the ID of the entity (entityId). For basic problems, the system provides the MLPrediction and MLClassificationResult entities.

Extending IMLServiceProxy and MLServiceProxy (optional)

You can extend the existing IMLServiceProxy interface and its implementations with a prediction method for the current problem type. In particular, the MLServiceProxy class provides the generic Predict method that accepts contracts for input data and for prediction results.

Implementing IMLBatchPredictor

If the web-service is called with a large set of data (500 instances and more), implement the IMLBatchPredictor interface. You must implement the following methods:

- FormatValueForSaving – returns a converted prediction value ready for database storage. During batch prediction, records are updated with the Update method rather than through Entity instances, which speeds up the process.

- SavePredictionResult – defines how the system will store the prediction value per entity. For basic ML problems, the system provides MLPrediction and MLClassificationResult objects.

Case description

Connect a custom implementation of predictive scoring to Creatio.

Source code

You can download the package with an implementation of the case using the following link.

Case implementation algorithm

1. Create a machine learning web-service engine

A sample implementation of a machine learning web service for ASP.Net Core 3.1 can be downloaded here.

Implement the MLService machine learning web-service.

Declare the required methods:

- /session/start

- /data/upload

- /session/info/get

- /fakeScorer/beginTraining

- /fakeScorer/predict

The complete source code of the module is available below:

    namespace FakeScoring.Controllers
    {
        using System;
        using System.Collections.Generic;
        using System.Linq;
        using System.Net;
        using Microsoft.AspNetCore.Mvc;
        using Terrasoft.ML.Interfaces;
        using Terrasoft.ML.Interfaces.Requests;
        using Terrasoft.ML.Interfaces.Responses;

        [ApiController]
        [Route("")]
        public class MLService : Controller
        {
            public const string FakeModelInstanceUId = "{BFC0BD71-19B1-47B1-8BC4-D761D9172667}";

            private List<ScoringOutput.FeatureContribution> GenerateFakeContributions(DatasetValue record) {
                var random = new Random(42);
                return record.Select(columnValue => new ScoringOutput.FeatureContribution {
                    Name = columnValue.Key,
                    Contribution = random.NextDouble(),
                    Value = columnValue.Value.ToString()
                }).ToList();
            }

            [HttpGet("ping")]
            public JsonResult Ping() {
                return new JsonResult("Ok");
            }

            /// <summary>
            /// Handshake request to service with the purpose to start a model training session.
            /// </summary>
            /// <param name="request">Instance of <see cref="StartSessionRequest"/>.</param>
            /// <returns>Instance of <see cref="StartSessionResponse"/>.</returns>
            [HttpPost("session/start")]
            public StartSessionResponse StartSession(StartSessionRequest request) {
                return new StartSessionResponse {
                    SessionId = Guid.NewGuid()
                };
            }

            /// <summary>
            /// Uploads training data.
            /// </summary>
            /// <param name="request">The upload data request.</param>
            /// <returns>Instance of <see cref="JsonResult"/>.</returns>
            [HttpPost("data/upload")]
            public JsonResult UploadData(UploadDataRequest request) {
                return new JsonResult(string.Empty) {
                    StatusCode = (int)HttpStatusCode.OK
                };
            }

            /// <summary>
            /// Begins fake scorer training on uploaded data.
            /// </summary>
            /// <param name="request">The scorer training request.</param>
            /// <returns>Simple <see cref="JsonResult"/>.</returns>
            [HttpPost("fakeScorer/beginTraining")]
            public JsonResult BeginScorerTraining(BeginScorerTrainingRequest request) {
                // Start training work here. It doesn't have to be done by the end of this request.
                return new JsonResult(string.Empty) {
                    StatusCode = (int)HttpStatusCode.OK
                };
            }

            /// <summary>
            /// Returns current session state and model statistics, if training is complete.
            /// </summary>
            /// <param name="request">Instance of <see cref="GetSessionInfoRequest"/>.</param>
            /// <returns>Instance of <see cref="GetSessionInfoResponse"/> with detailed state info.</returns>
            [HttpPost("session/info/get")]
            public GetSessionInfoResponse GetSessionInfo(GetSessionInfoRequest request) {
                var response = new GetSessionInfoResponse {
                    SessionState = TrainSessionState.Done,
                    ModelSummary = new ModelSummary {
                        DataSetSize = 100500,
                        Metric = 0.79,
                        MetricType = "Accuracy",
                        TrainingTimeMinutes = 5,
                        ModelInstanceUId = new Guid(FakeModelInstanceUId)
                    }
                };
                return response;
            }

            /// <summary>
            /// Performs fake scoring prediction.
            /// </summary>
            /// <param name="request">Request object.</param>
            /// <returns>Scoring rates.</returns>
            [HttpPost("fakeScorer/predict")]
            public ScoringResponse Predict(ExplainedScoringRequest request) {
                List<ScoringOutput> outputs = new List<ScoringOutput>();
                var random = new Random(42);
                foreach (var record in request.PredictionParams.Data) {
                    var output = new ScoringOutput {
                        Score = random.NextDouble()
                    };
                    if (request.PredictionParams.PredictContributions) {
                        output.Bias = 0.03;
                        output.Contributions = GenerateFakeContributions(record);
                    }
                    outputs.Add(output);
                }
                return new ScoringResponse {
                    Outputs = outputs
                };
            }
        }
    }
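Note that the sample service creates `new Random(42)` on every call, so each prediction request receives the same sequence of pseudo-random scores. The following minimal sketch is independent of the service code and only illustrates this property of a fixed seed; the FixedSeedSketch class and the FakeScores method are hypothetical names introduced for the illustration.

```csharp
using System;
using System.Collections.Generic;

public static class FixedSeedSketch
{
    // Generates `count` fake scores the way the sample service does:
    // a new Random with a fixed seed is created on every call.
    public static List<double> FakeScores(int count, int seed = 42)
    {
        var random = new Random(seed);
        var scores = new List<double>(count);
        for (int i = 0; i < count; i++)
        {
            // NextDouble returns a value in the range [0.0, 1.0).
            scores.Add(random.NextDouble());
        }
        return scores;
    }
}
```

Two calls to FakeScores(3) return identical lists within the same runtime. This keeps a stub service predictable during integration testing. A real scorer would compute its output from the uploaded data instead.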

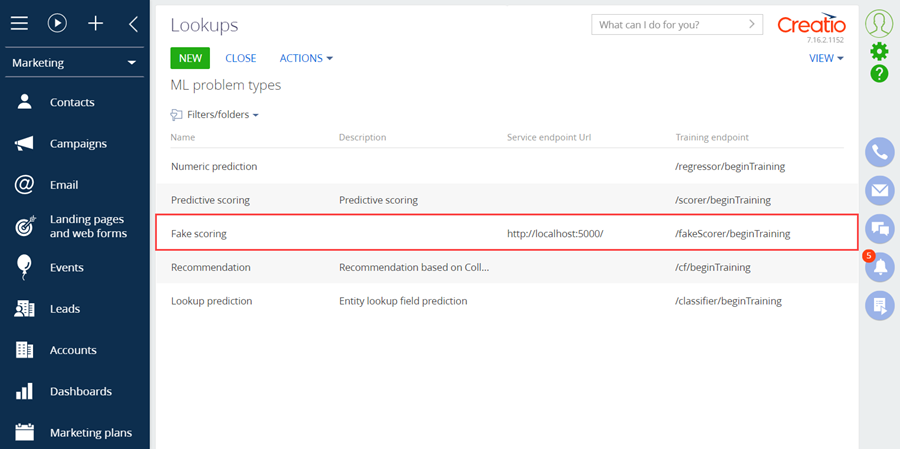

2. Expand the problem type list of the machine learning service

To expand the problem type list:

- Open the System Designer. Go to the [System setup] block –> click [Lookups].

- Select the [ML problem types] lookup.

- Add a new record.

In the record, specify (Fig. 1):

- [Name] – "Fake scoring".

- [Service endpoint Url] – "http://localhost:5000/".

- [Training endpoint] – "/fakeScorer/beginTraining".

- [Prediction endpoint] – "/fakeScorer/predict".

Fig. 1. Setting up the parameters of the problem type

The ID of the added record is 319c39fd-17a6-453a-bceb-57a398d52636.

3. Implement a machine learning model

Execute the [Add] –> [Additional] –> [Schema of the Edit Page View Model] menu command on the [Schemas] tab in the [Configuration] section of the custom package. The new schema must inherit the functionality of the MLModelPage base page defined in the ML package, so specify MLModelPage as its parent schema.

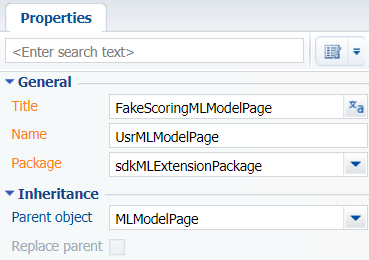

Specify the following parameters for the created schema (Fig. 2):

- [Title] – “FakeScoringMLModelPage”.

- [Name] – "UsrMLModelPage".

- [Parent object] – select “MLModelPage”.

Fig. 2. Setting up the mini-page view model schema

Override the getIsScoring base method so that the new mini-page has the same style as the mini-page for creating a predictive scoring model.

After making changes, save and publish the schema.

Go to the [Advanced settings] section -> [Configuration] -> Custom package -> the [Schemas] tab. Click [Add] -> [Source code]. Learn more about creating a schema of the [Source Code] type in the “Creating the [Source code] schema” article.

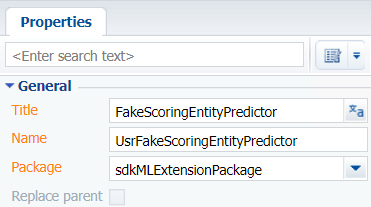

Specify the following parameters for the created object schema (Fig. 3):

- [Title] – “FakeScoringEntityPredictor”.

- [Name] – "UsrFakeScoringEntityPredictor".

Fig. 3. Setting up the [Source Code] type object schema

Implement the Predict method that accepts data exported from the system by object and returns the prediction value. The method uses a proxy class that implements the IMLServiceProxy interface to facilitate web-service calls.

Initialize the ProblemTypeId property with the ID of the new problem type record created in the MLProblemType lookup and implement the SaveEntityPredictedValues and SavePrediction methods. The complete source code of the module is available below:

    namespace Terrasoft.Configuration.ML
    {
        using System;
        using System.Collections.Generic;
        using Core;
        using Core.Factories;
        using Terrasoft.ML.Interfaces;

        [DefaultBinding(typeof(IMLEntityPredictor), Name = "319C39FD-17A6-453A-BCEB-57A398D52636")]
        [DefaultBinding(typeof(IMLPredictor<ScoringOutput>), Name = "319C39FD-17A6-453A-BCEB-57A398D52636")]
        public class FakeScoringEntityPredictor: MLBaseEntityPredictor<ScoringOutput>, IMLEntityPredictor,
            IMLPredictor<ScoringOutput>
        {
            public FakeScoringEntityPredictor(UserConnection userConnection) : base(userConnection) {
            }

            protected override Guid ProblemTypeId => new Guid("319C39FD-17A6-453A-BCEB-57A398D52636");

            protected override ScoringOutput Predict(IMLServiceProxy proxy, MLModelConfig model,
                    Dictionary<string, object> data) {
                return proxy.FakeScore(model, data, true);
            }

            protected override List<ScoringOutput> Predict(MLModelConfig model,
                    IList<Dictionary<string, object>> dataList, IMLServiceProxy proxy) {
                return proxy.FakeScore(model, dataList, true);
            }

            protected override void SaveEntityPredictedValues(MLModelConfig model, Guid entityId,
                    ScoringOutput predictedResult) {
                var predictedValues = new Dictionary<MLModelConfig, double> {
                    { model, predictedResult.Score }
                };
                PredictionSaver.SaveEntityScoredValues(model.EntitySchemaId, entityId, predictedValues);
            }

            protected override void SavePrediction(MLModelConfig model, Guid entityId,
                    ScoringOutput predictedResult) {
                PredictionSaver.SavePrediction(model.Id, model.ModelInstanceUId, entityId,
                    predictedResult.Score);
            }
        }
    }
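The record ID is listed in lowercase in step 2, while the source code spells it in uppercase. For Guid values the letter case makes no difference, as the minimal sketch below shows. The ProblemTypeIdSketch class and its members are hypothetical names used only for this illustration. Whether the Name string of the DefaultBinding attribute is compared case-sensitively is not stated in this article, so using one spelling consistently, as the listing does, is the cautious choice.

```csharp
using System;

public static class ProblemTypeIdSketch
{
    // ID of the "Fake scoring" record as listed for the lookup (lowercase).
    public const string LookupId = "319c39fd-17a6-453a-bceb-57a398d52636";

    // The same ID as written in the source code listing (uppercase).
    public const string CodeId = "319C39FD-17A6-453A-BCEB-57A398D52636";

    // Returns true when both spellings denote the same Guid value.
    public static bool SameProblemType()
    {
        return new Guid(LookupId) == new Guid(CodeId);
    }

    // Normalizes any accepted GUID spelling to the uppercase, hyphenated form.
    public static string ToUpperId(string id)
    {
        return Guid.Parse(id).ToString("D").ToUpperInvariant();
    }
}
```

ToUpperId can be applied to an ID copied from the database to obtain the spelling used in the attribute values.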

After making changes, save and publish the schema.

Go to the [Advanced settings] section -> [Configuration] -> Custom package -> the [Schemas] tab. Click [Add] -> [Source code]. Learn more about creating a schema of the [Source Code] type in the “Creating the [Source code] schema” article.

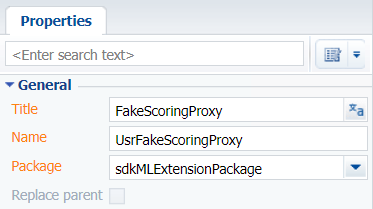

Specify the following parameters for the created object schema (Fig. 4):

- [Title] – "FakeScoringProxy".

- [Name] – "UsrFakeScoringProxy".

Fig. 4. Setting up the [Source Code] type object schema

Extend the existing IMLServiceProxy interface and its implementations with a prediction method for the current problem type. In particular, the MLServiceProxy class provides the generic Predict method that accepts contracts for input data and prediction results.

The complete source code of the module is available below:

    namespace Terrasoft.Configuration.ML
    {
        using System;
        using System.Collections.Generic;
        using System.Linq;
        using Terrasoft.ML.Interfaces;
        using Terrasoft.ML.Interfaces.Requests;
        using Terrasoft.ML.Interfaces.Responses;

        public partial interface IMLServiceProxy
        {
            ScoringOutput FakeScore(MLModelConfig model, Dictionary<string, object> data,
                bool predictContributions);

            List<ScoringOutput> FakeScore(MLModelConfig model, IList<Dictionary<string, object>> dataList,
                bool predictContributions);
        }

        public partial class MLServiceProxy : IMLServiceProxy
        {
            public ScoringOutput FakeScore(MLModelConfig model, Dictionary<string, object> data,
                    bool predictContributions) {
                ScoringResponse response = Predict<ExplainedScoringRequest, ScoringResponse>(
                    model.ModelInstanceUId, new List<Dictionary<string, object>> { data },
                    model.PredictionEndpoint, 100, predictContributions);
                return response.Outputs.FirstOrDefault();
            }

            public List<ScoringOutput> FakeScore(MLModelConfig model,
                    IList<Dictionary<string, object>> dataList, bool predictContributions) {
                // Count at least one record so that the timeout scales with the batch size.
                int count = Math.Max(1, dataList.Count);
                int timeout = Math.Max(ScoreTimeoutSec * count, BatchScoreTimeoutSec);
                ScoringResponse response = Predict<ScoringRequest, ScoringResponse>(model.ModelInstanceUId,
                    dataList, model.PredictionEndpoint, timeout, predictContributions);
                return response.Outputs;
            }
        }
    }
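The batch overload derives the request timeout from the number of records. A minimal sketch of a timeout that grows with the batch size but never drops below a fixed floor is given below. It assumes that the record count is clamped to at least 1. The constant values are made up for illustration, because the actual values of ScoreTimeoutSec and BatchScoreTimeoutSec are not shown in this article, and the BatchTimeoutSketch class is a hypothetical name.

```csharp
using System;

public static class BatchTimeoutSketch
{
    // Hypothetical values; the real constants used by MLServiceProxy
    // are not shown in this article.
    public const int ScoreTimeoutSec = 5;
    public const int BatchScoreTimeoutSec = 60;

    // The timeout grows with the number of records
    // but never drops below the batch floor.
    public static int ComputeTimeoutSec(int recordCount)
    {
        int count = Math.Max(1, recordCount);
        return Math.Max(ScoreTimeoutSec * count, BatchScoreTimeoutSec);
    }
}
```

With these assumed values, a batch of 10 records gets the 60-second floor, while a batch of 100 records gets 500 seconds.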

After making changes, save and publish the schema.

Go to the [Advanced settings] section -> [Configuration] -> Custom package -> the [Schemas] tab. Click [Add] -> [Source code]. Learn more about creating a schema of the [Source Code] type in the “Creating the [Source code] schema” article.

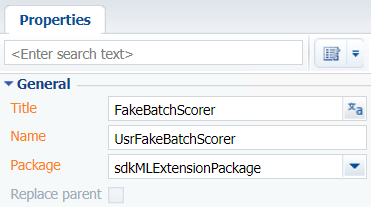

Specify the following parameters for the created object schema (Fig. 5):

- [Title] – "FakeBatchScorer".

- [Name] – "UsrFakeBatchScorer".

Fig. 5. Setting up the [Source Code] type object schema

To use the batch prediction functionality, implement the IMLBatchPredictor interface with its FormatValueForSaving and SavePredictionResult methods.

The complete source code of the module is available below:

    namespace Terrasoft.Configuration.ML
    {
        using System;
        using Core;
        using Terrasoft.Core.Factories;
        using Terrasoft.ML.Interfaces;

        [DefaultBinding(typeof(IMLBatchPredictor), Name = "319C39FD-17A6-453A-BCEB-57A398D52636")]
        public class FakeBatchScorer : MLBatchPredictor<ScoringOutput>
        {
            public FakeBatchScorer(UserConnection userConnection) : base(userConnection) {
            }

            protected override object FormatValueForSaving(ScoringOutput scoringOutput) {
                return Convert.ToInt32(scoringOutput.Score * 100);
            }

            protected override void SavePredictionResult(Guid modelId, Guid modelInstanceUId, Guid entityId,
                    ScoringOutput value) {
                PredictionSaver.SavePrediction(modelId, modelInstanceUId, entityId, value);
            }
        }
    }
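FormatValueForSaving turns a score from the [0, 1] range into an integer percentage with Convert.ToInt32, which rounds to the nearest integer and resolves exact midpoints to the even neighbor. The sketch below isolates that conversion so it can be tried without the Creatio classes. The ScoreFormattingSketch class and the ToPercent method are hypothetical names introduced for the illustration.

```csharp
using System;

public static class ScoreFormattingSketch
{
    // Mirrors the conversion used in FormatValueForSaving:
    // a score in the [0, 1] range becomes an integer percentage.
    public static int ToPercent(double score)
    {
        // Convert.ToInt32(double) rounds to the nearest integer;
        // a value exactly halfway between two integers goes to the even one.
        return Convert.ToInt32(score * 100);
    }
}
```

For example, a score of 0.79 is stored as 79, while 0.125 (12.5 percent) is stored as 12 and 0.375 (37.5 percent) as 38 because of the midpoint rule.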

After making changes, save and publish the schema.